Large Language Models

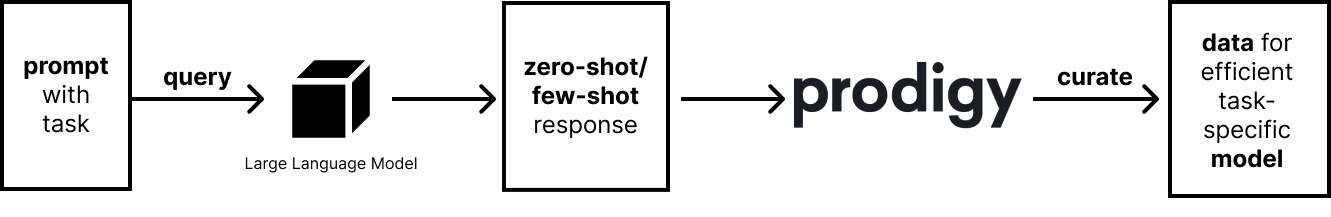

Nothing is stopping you from integrating Prodigy with services that can help you annotate, including services that provide large language models capable of zero-shot or few-shot learning. Prodigy also ships with a few built-in recipes for this via its spaCy-LLM integration.

Named Entity Recognition

You can use ner.llm.correct to annotate examples with live suggestions from a large language model. This recipe highlights the entities the model predicts and lets you accept them as correct or curate them manually. Alternatively, you can fetch examples ahead of time: the ner.llm.fetch recipe gives you the same suggestions but downloads a large batch of examples upfront. These examples can then be annotated and corrected with the ner.manual recipe.
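Before correcting a batch fetched with ner.llm.fetch, it can help to skim what the model suggested. The sketch below assumes the output file uses Prodigy's usual task format: one JSON object per line with a "text" key and a "spans" list whose entries hold character offsets ("start", "end") and a "label". The function name summarize_spans is hypothetical, so check a few lines of your own file before relying on it.

```python
import json
from collections import Counter
from pathlib import Path


def summarize_spans(path: Path) -> Counter:
    """Print each suggested entity and count suggestions per label.

    Assumes one Prodigy-style task per line, each with a "text" key and an
    optional "spans" list of {"start", "end", "label"} dicts (character offsets).
    """
    counts: Counter = Counter()
    with path.open(encoding="utf8") as f:
        for line in f:
            if not line.strip():
                continue  # tolerate blank lines between records
            task = json.loads(line)
            for span in task.get("spans", []):
                # Slice the original text so each suggestion can be spot-checked.
                surface = task["text"][span["start"]:span["end"]]
                print(f"{span['label']:<12} {surface}")
                counts[span["label"]] += 1
    return counts
```

Calling summarize_spans(Path("ner-annotated.jsonl")) on the fetched file gives a quick sense of which labels dominate before you open the batch in ner.manual.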

Both recipes can detect entities that spaCy models aren't trained on, and you're free to adapt them. You can also improve the prompt by providing examples for few-shot learning or by adding context that explains the task.
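To make few-shot prompting concrete, here is a minimal, hypothetical sketch of how task context and a few labelled examples could be combined into a single prompt. It is not spacy-llm's actual prompt template (spacy-llm renders its own templates and typically reads few-shot examples from a file referenced in its config); build_ner_prompt and FewShotExample are illustrative names only.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FewShotExample:
    """A hand-labelled example: the text plus the entity strings per label."""
    text: str
    entities: Dict[str, List[str]] = field(default_factory=dict)


def build_ner_prompt(
    text: str,
    labels: List[str],
    examples: List[FewShotExample],
    context: str = "",
) -> str:
    """Assemble a simple few-shot NER prompt (illustrative format only)."""
    lines: List[str] = []
    if context:
        # Optional task description, e.g. what counts as each label.
        lines.append(context.strip())
    lines.append(f"Find entities of these types: {', '.join(labels)}.")
    lines.append("")
    # Each worked example shows the model the expected answer format.
    for ex in examples:
        lines.append(f"Text: {ex.text}")
        for label in labels:
            found = ", ".join(ex.entities.get(label, [])) or "none"
            lines.append(f"{label}: {found}")
        lines.append("")
    # The new text goes last; the model is expected to continue the pattern.
    lines.append(f"Text: {text}")
    return "\n".join(lines)
```

The context line is where edge cases can be explained, while each added example costs prompt tokens, so a handful of representative examples is a sensible starting point.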

Example

prodigy ner.llm.fetch spacy-llm-config.cfg examples.jsonl ner-annotated.jsonl

Example of entities pre-highlighted by an LLM

Text Classification

Example

prodigy textcat.llm.fetch spacy-llm-config.cfg examples.jsonl textcat-annotated.jsonl

Example response from LLM with reasoning

The textcat.llm.correct recipe lets you classify texts faster with the help of large language models. It also provides the reason a particular label was chosen. As with the named entity recipes, you can instead fetch examples upfront via the textcat.llm.fetch recipe.

By fetching the examples upfront, you can also filter them based on the LLM's predictions. This is especially useful for an imbalanced classification task with a rare label: instead of going through all the examples manually, you can check only the examples for which OpenAI predicts the label of interest.
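As a sketch of that filtering step, the code below keeps only the fetched examples for which the LLM predicted a given label. It assumes the textcat.llm.fetch output stores the selected labels in an "accept" list, as in Prodigy's choice interface; that key is an assumption, so inspect your own output and adjust predicted_labels if it differs. The function names are hypothetical.

```python
import json
from pathlib import Path
from typing import Iterable, Iterator, Set


def predicted_labels(task: dict) -> Set[str]:
    """Labels the LLM selected for one task.

    Assumption: predictions live in an "accept" list (Prodigy's choice format).
    Change this function if your fetched file uses different keys.
    """
    return set(task.get("accept", []))


def filter_by_label(tasks: Iterable[dict], label: str) -> Iterator[dict]:
    """Yield only the tasks for which the LLM predicted `label`."""
    for task in tasks:
        if label in predicted_labels(task):
            yield task


def filter_file(src: Path, dest: Path, label: str) -> int:
    """Write the matching tasks from src to dest and return how many were kept."""
    kept = 0
    with src.open(encoding="utf8") as fin, dest.open("w", encoding="utf8") as fout:
        tasks = (json.loads(line) for line in fin if line.strip())
        for task in filter_by_label(tasks, label):
            fout.write(json.dumps(task) + "\n")
            kept += 1
    return kept
```

The filtered file is much smaller to review, but it only contains examples the LLM flagged: any rare-label examples the model missed will not appear in it, so the LLM's recall on that label limits what you see.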

Generate terminology lists from scratch

There are many ways to use a large language model with zero-shot capabilities. Besides making predictions to pre-annotate examples, you can also have it bootstrap terminology lists via the terms.llm.fetch recipe. These terms can be reviewed and later used for named entity recognition, span categorization or weak supervision.

Example

prodigy terms.llm.fetch skateboard-trick-terms config.cfg "skateboard tricks"

skateboard-tricks.jsonl

{"text": "kickflip", "meta": {"topic": "skateboard tricks"}}

{"text": "nose manual", "meta": {"topic": "skateboard tricks"}}

{"text": "heelside flip", "meta": {"topic": "skateboard tricks"}}

{"text": "ollie", "meta": {"topic": "skateboard tricks"}}

{"text": "frontside boardslide", "meta": {"topic": "skateboard tricks"}}

{"text": "5050 Grind", "meta": {"topic": "skateboard tricks"}}
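Once reviewed, a terminology list like this can be turned into match patterns for pre-highlighting entities or spans. The sketch below writes one case-insensitive token pattern per term in the {"label": ..., "pattern": [...]} JSONL format used by Prodigy pattern files. The label SKATE_TRICK and the helper names are hypothetical, and splitting terms on whitespace only approximates spaCy's tokenizer.

```python
import json
from pathlib import Path
from typing import Dict, List


def term_to_pattern(term: str, label: str) -> Dict[str, object]:
    """Build a case-insensitive token pattern for a single term.

    Whitespace splitting only approximates spaCy's tokenizer: terms with
    hyphens or punctuation may need a real tokenizer to match reliably.
    """
    tokens: List[Dict[str, str]] = [{"lower": tok.lower()} for tok in term.split()]
    return {"label": label, "pattern": tokens}


def terms_to_patterns(src: Path, dest: Path, label: str) -> int:
    """Convert a terms JSONL file into a patterns JSONL file; return the count."""
    seen = set()
    written = 0
    with src.open(encoding="utf8") as fin, dest.open("w", encoding="utf8") as fout:
        for line in fin:
            if not line.strip():
                continue  # blank lines between records are allowed
            task = json.loads(line)
            # If the file was exported from a reviewed dataset, skip rejected terms.
            if task.get("answer", "accept") != "accept":
                continue
            term = task["text"].strip()
            key = term.lower()
            if not term or key in seen:
                continue  # drop empty terms and case-insensitive duplicates
            seen.add(key)
            fout.write(json.dumps(term_to_pattern(term, label)) + "\n")
            written += 1
    return written
```

The resulting file can then be passed to recipes that accept a patterns file, such as ner.manual via its --patterns option.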