Prompt Engineering

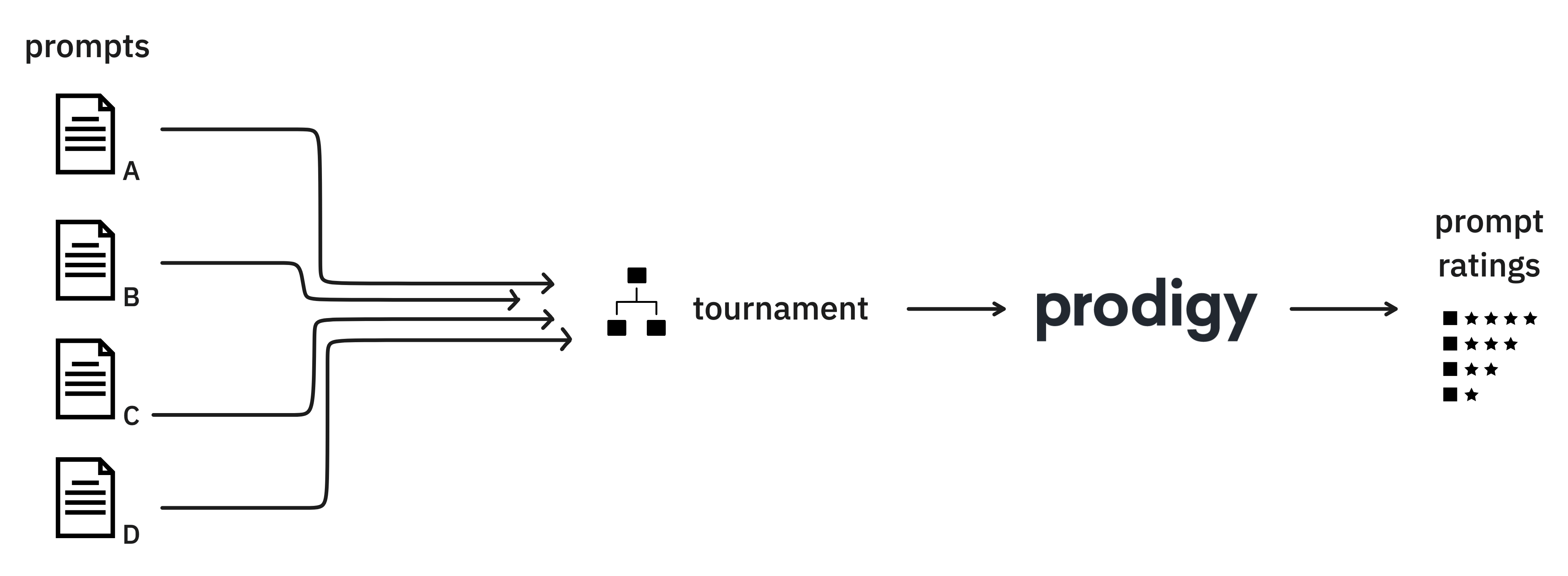

Prodigy supports recipes and tools for prompt engineering via prompt tournaments as of version 1.12. In these tournaments, your prompts compete against each other, and as you annotate you select the one that gives the best results.

Prompts as an A/B test

When you're engineering prompts, you're going to get noisy results. To determine which prompt is best, you need a quantifiable way to compare them. Prodigy offers tools and annotation interfaces for this task, and even provides pre-made recipes that integrate with Large Language Models via spacy-llm.
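Because individual judgments are noisy, one simple way to turn a pile of A/B preferences into a quantifiable comparison is an exact two-sided sign (binomial) test: if both prompts were equally good, each would win about half the time. The sketch below is a generic statistical illustration, not how Prodigy scores prompts. The function name `sign_test_p_value` and the tallies are hypothetical, and ties are assumed to have been dropped before counting.

```python
from math import comb


def sign_test_p_value(wins_a: int, wins_b: int) -> float:
    """Two-sided exact binomial (sign) test for H0: both prompts win equally often.

    Hypothetical helper for illustration; ties are assumed to be excluded.
    """
    n = wins_a + wins_b
    if n == 0:
        return 1.0  # no evidence either way
    k = min(wins_a, wins_b)
    # One tail: probability of a split at least as lopsided as k vs. n - k when p = 0.5.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2**n
    # Double it for a two-sided test, capped at 1.
    return min(1.0, 2 * tail)


# Hypothetical tallies: prompt A preferred 15 times, prompt B 5 times.
print(round(sign_test_p_value(15, 5), 4))
```

With 15 wins to 5 the p-value is about 0.041, so a split that lopsided would be unlikely if the prompts were equally good; with 10 wins to 10 it is 1.0.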

Example

prodigy ab.llm.tournament haiku input.jsonl ./prompts ./configs --display-template-path display.jinja2

Example of A/B prompt workflow

Compare two prompts

The ab.llm.tournament recipe allows you to quickly compare the quality of outputs from two candidates in a quantifiable and blind way.
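A blind comparison hides which prompt produced which output, typically by randomizing the display order for every pair. The following is a minimal sketch of that idea; the `make_blind_pair` and `resolve_choice` helpers and the `BlindPair` type are hypothetical and illustrative, not part of Prodigy's API.

```python
from __future__ import annotations

import random
from dataclasses import dataclass


@dataclass
class BlindPair:
    """Two outputs shown to the annotator without revealing their source (hypothetical type)."""
    left: str
    right: str
    sources: dict[str, str]  # hidden mapping: display position -> candidate name


def make_blind_pair(name_a: str, output_a: str, name_b: str, output_b: str,
                    rng: random.Random) -> BlindPair:
    """Shuffle which candidate's output appears on the left so position can't give it away."""
    items = [(name_a, output_a), (name_b, output_b)]
    rng.shuffle(items)
    (src_left, text_left), (src_right, text_right) = items
    return BlindPair(text_left, text_right, {"left": src_left, "right": src_right})


def resolve_choice(pair: BlindPair, chosen_position: str) -> str:
    """Map the annotator's choice ("left" or "right") back to the winning candidate."""
    return pair.sources[chosen_position]


rng = random.Random(0)
pair = make_blind_pair("prompt1.jinja2", "haiku A", "prompt2.jinja2", "haiku B", rng)
print(pair.left, "|", pair.right, "->", resolve_choice(pair, "left"))
```

Because the order is reshuffled for every pair, a habit such as always picking the left option spreads evenly across both candidates instead of biasing the result toward one.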

prompt1.jinja2
Write a haiku about {{topic}}.

prompt2.jinja2
Write a hilarious haiku about {{topic}}.

input.jsonl
{"topic": "Python"}
{"topic": "star wars"}
{"topic": "maths"}

Tournament of prompts

The ab.llm.tournament recipe also allows you to quickly compare different LLM backends by leveraging spacy-llm.

As you annotate, you'll also get an impression of which prompt/backend combinations perform best.
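The example log for this recipe labels each candidate as a prompt plus a config, such as `[prompt1.jinja2 + gpt3.cfg]`, which suggests the candidates are the combinations of files in `./prompts` and `./configs`. The sketch below builds those combinations and picks which two to show next. The least-compared-first scheduling is an assumption made for illustration; Prodigy's own matchmaking may differ, and the helper names are hypothetical.

```python
import itertools
import random


def build_candidates(prompt_files, config_files):
    """Each prompt/backend combination is one candidate, e.g. ("prompt1.jinja2", "gpt3.cfg")."""
    return list(itertools.product(prompt_files, config_files))


def next_matchup(candidates, trials, rng):
    """Pick a pair of candidates that has been compared least often so far (assumed strategy).

    `trials` maps frozenset({cand_a, cand_b}) -> number of comparisons already made.
    Ties are broken at random so the annotator sees varied pairs.
    """
    if len(candidates) < 2:
        raise ValueError("a tournament needs at least two candidates")
    pairs = list(itertools.combinations(candidates, 2))
    fewest = min(trials.get(frozenset(p), 0) for p in pairs)
    least_seen = [p for p in pairs if trials.get(frozenset(p), 0) == fewest]
    return rng.choice(least_seen)


cands = build_candidates(["prompt1.jinja2", "prompt2.jinja2"], ["gpt3.cfg", "gpt4.cfg"])
a, b = next_matchup(cands, {}, random.Random(0))
print(f"[{a[0]} + {a[1]}] vs [{b[0]} + {b[1]}]")
```

Two prompts and two configs give four candidates and six possible pairings; favoring the least-compared pair keeps the trial counts roughly balanced across the whole tournament.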

Example

prodigy ab.llm.tournament haiku input.jsonl ./prompts ./configs --display-template-path display.jinja2

=============== Current winner: [prompt1.jinja2 + gpt3.cfg] ===============
comparison                                                  prob  trials
[prompt1.jinja2 + gpt3.cfg] > [prompt1.jinja2 + gpt4.cfg]   0.50  0
[prompt1.jinja2 + gpt3.cfg] > [prompt1.jinja2 + gpt4.cfg]   0.50  0
[prompt1.jinja2 + gpt3.cfg] > [prompt1.jinja2 + gpt4.cfg]   0.71  1

...after more annotations ...

============== Current winner: [prompt1.jinja2 + gpt3-5.cfg] ==============
comparison                                                  prob  trials
[prompt1.jinja2 + gpt3.cfg] > [prompt1.jinja2 + gpt4.cfg]   0.55  23
[prompt1.jinja2 + gpt3.cfg] > [prompt1.jinja2 + gpt4.cfg]   0.82  19
[prompt1.jinja2 + gpt3.cfg] > [prompt1.jinja2 + gpt4.cfg]   0.91  12
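The `prob` column appears to report how likely one candidate is to beat another, and `trials` counts how often that pair has been compared. As an illustration only, the sketch below estimates such a probability as the posterior mean under a uniform Beta(1, 1) prior, so an untested pair starts at 0.50 and each result moves it, and picks the current winner by smoothed win rate. This is an assumed model, not Prodigy's: it gives 0.67 after one win rather than the 0.71 in the log, and the function name `scoreboard` is hypothetical.

```python
from collections import Counter
from itertools import combinations


def scoreboard(candidates, outcomes):
    """Summarize pairwise results as (a, b, prob, trials) rows plus the current leader.

    `outcomes` is a list of (winner, loser) tuples. prob is the posterior mean of
    P(a beats b) under a uniform Beta(1, 1) prior: (wins_a + 1) / (trials + 2).
    """
    wins = Counter(outcomes)
    rows = []
    for a, b in combinations(candidates, 2):
        a_wins, b_wins = wins[(a, b)], wins[(b, a)]
        n = a_wins + b_wins
        prob = (a_wins + 1) / (n + 2)
        # Orient each row so the favored candidate is printed on the left.
        if prob < 0.5:
            a, b, prob = b, a, 1 - prob
        rows.append((a, b, prob, n))
    # Leader: highest Laplace-smoothed overall win rate (ties go to the earlier candidate).
    total_wins = Counter(w for w, _ in outcomes)
    total_games = Counter(c for pair in outcomes for c in pair)
    leader = max(candidates, key=lambda c: (total_wins[c] + 1) / (total_games[c] + 2))
    return leader, rows


cands = ["prompt1.jinja2 + gpt3.cfg", "prompt1.jinja2 + gpt4.cfg"]
leader, rows = scoreboard(cands, [(cands[0], cands[1])])
print(f"Current winner: [{leader}]")
for a, b, prob, n in rows:
    print(f"[{a}] > [{b}]  {prob:.2f}  {n}")
```

After a single win for the gpt3 candidate, this prints `[prompt1.jinja2 + gpt3.cfg] > [prompt1.jinja2 + gpt4.cfg]  0.67  1`. A smoothed win rate keeps one early result from dominating the leaderboard before many trials have accumulated.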